Client: An enterprise-level EHS (environment, health, and safety) vendor.

Challenge: The client’s analytics process relied on Python scripts to pull data from the application’s REST API, but this setup was unstable and difficult to maintain. Frequent timeouts, massive JSON responses, and complex scripting made data syncs unreliable, while limited monitoring made failures difficult to detect and fix.

Solution: Softengi developed a direct, scheduled cloud publishing service based on the data lake approach to data storage and management.

What Is a Data Lake?

A data lake is a centralized storage system that holds large volumes of raw data (structured, semi-structured, and unstructured) in their original format until they are needed for analysis.

Traditional systems, such as relational databases and data warehouses, require data to be structured before it is stored, an approach known as schema-on-write. A data lake uses schema-on-read: data is stored as-is, and its structure or interpretation is determined only when it is read.
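To make the schema-on-read idea concrete, here is a minimal, stdlib-only Python sketch that is not tied to any particular data lake product. Raw records are kept exactly as they arrived (JSON lines in a list, standing in for object storage), and each consumer applies its own schema only at read time. The record fields and the `read_with_schema` helper are hypothetical.

```python
import json
from datetime import date

# Hypothetical raw events, stored exactly as received: fields vary between records.
raw_store: list[str] = [
    '{"id": 1, "type": "incident", "reported": "2024-03-01", "severity": "high"}',
    '{"id": 2, "type": "inspection", "reported": "2024-03-02"}',
    '{"id": 3, "type": "incident", "reported": "2024-03-05", "severity": "low", "site": "B"}',
]


def read_with_schema(lines, schema):
    """Schema-on-read: parse raw lines and project them onto `schema` at query time.

    `schema` maps a field name to a converter; fields missing from a record become None.
    """
    for line in lines:
        record = json.loads(line)
        yield {
            field: (convert(record[field]) if field in record else None)
            for field, convert in schema.items()
        }


# One consumer reads incidents and needs real date objects...
incident_schema = {"id": int, "type": str, "reported": date.fromisoformat, "severity": str}
incidents = [r for r in read_with_schema(raw_store, incident_schema) if r["type"] == "incident"]

# ...while another interprets the same raw data differently, with no reload needed.
site_schema = {"id": int, "site": str}
sites = list(read_with_schema(raw_store, site_schema))

print(incidents)
print(sites)
```

In a schema-on-write system, the second consumer's `site` field would have required a schema change and a reload before it could be queried; here it is simply read from the raw data.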

A data lake represents a modern approach to storing and managing data, particularly for large-scale data and AI applications where flexibility and scalability are crucial.

- Structure: A data lake holds all types of information, including spreadsheets, sensor data, images, videos, and logs, without requiring prior organization.

- Flexibility: Data can be processed, cleaned, and analyzed later for purposes such as analytics, machine learning, or reporting.

- Scalability: Data lakes are typically built on scalable storage systems, such as Amazon S3, Azure Data Lake Storage, or Google Cloud Storage, enabling them to handle petabytes of data.

- Accessibility: Teams, including data scientists, analysts, and engineers, can access the same data for multiple use cases.

A data lake is like a massive digital reservoir where all of an organization’s data flows in and stays available for exploration and analysis later, without needing to decide in advance how it will be used.

Problem: Unreliable and Complex Data Flow

The client’s existing data flow for analytics relied on Python scripts running on their side to call our REST API. This setup was plagued by several issues that made it unreliable and difficult to maintain:

- Timeouts and Failures: Connections and SQL queries frequently timed out, especially when handling the large responses required for analytical purposes.

- Large Data Size: The API returned data as JSON, which is verbose: approximately 10 times larger than the same data in Parquet.

- Complex Maintenance: The client had to maintain Python scripts, handle complex incremental-load logic, and manually deal with failed or timed-out calls.

- Poor Monitoring: The large data transfer calls were hard for our team to monitor, making it challenging to verify sync health or restart failed syncs.
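Part of JSON's size overhead comes from its row-oriented text layout: every record repeats every field name, and similar values are scattered across records. The stdlib-only Python sketch below compares row-oriented JSON with a simple column-oriented layout of the same hypothetical rows, compressed with zlib. It is not Parquet (which also uses binary encodings and per-column compression), so it shows only the direction of the effect, not the exact ratio quoted above.

```python
import json
import zlib

# Hypothetical workflow rows, similar in shape to an analytics export.
rows = [
    {"workflow_id": i, "status": "Closed" if i % 3 else "Open", "updated": f"2024-01-{i % 28 + 1:02d}"}
    for i in range(10_000)
]

# Row-oriented JSON, as a typical API response returns it:
# the field names are repeated in every single record.
row_json = json.dumps(rows).encode("utf-8")

# Column-oriented layout: each field name appears once, and similar values sit
# next to each other, which makes general-purpose compression far more effective.
columns = {key: [row[key] for row in rows] for key in rows[0]}
columnar_compressed = zlib.compress(json.dumps(columns).encode("utf-8"), level=9)

print(f"row-oriented JSON: {len(row_json):>9,} bytes")
print(f"columnar + zlib:   {len(columnar_compressed):>9,} bytes")
print(f"ratio:             {len(row_json) / len(columnar_compressed):.1f}x")
```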

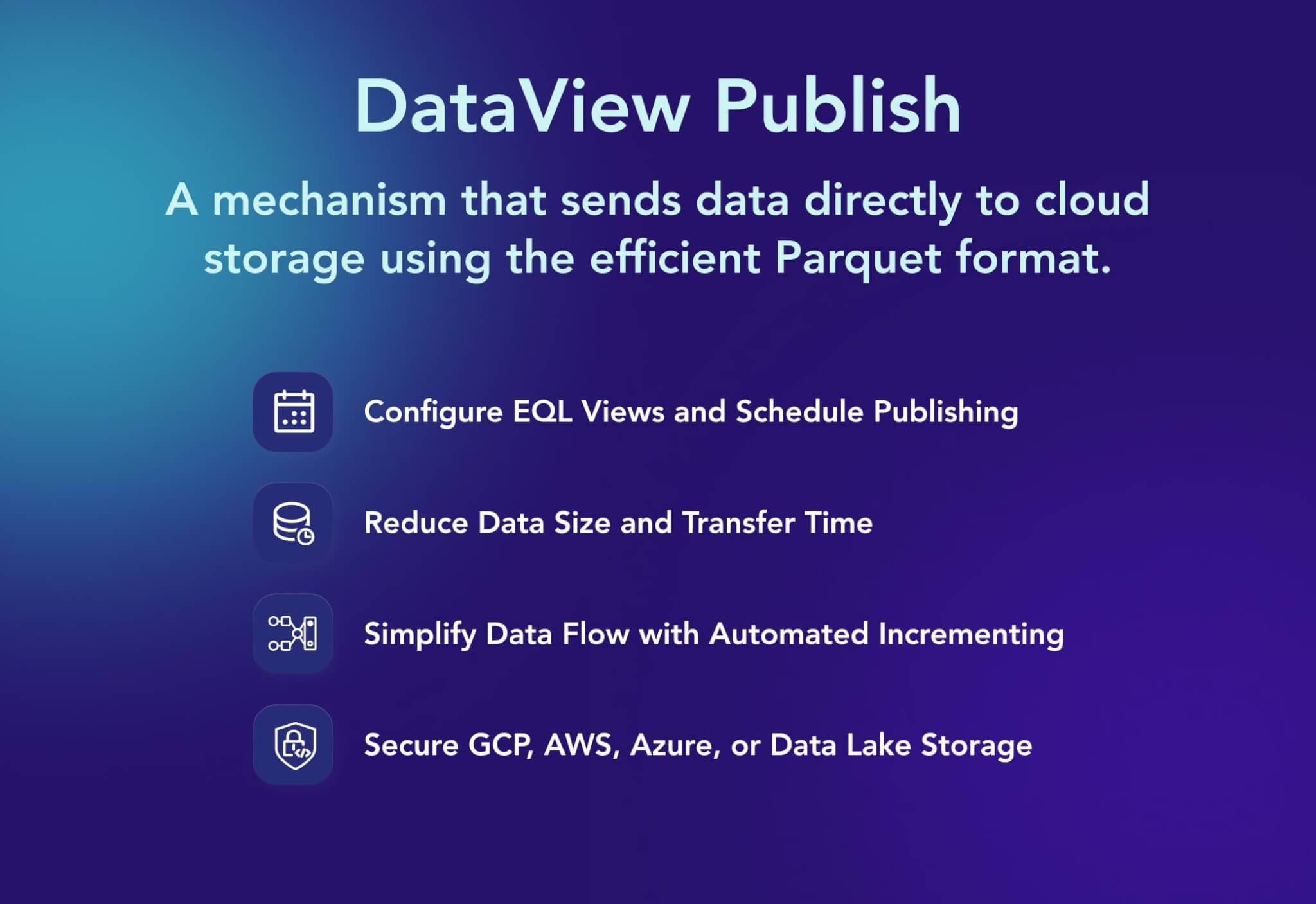

Solution: Direct, Scheduled Cloud Publishing

Softengi developed and implemented an internal DataView Publish mechanism that sends data directly to the client’s cloud storage in the efficient Parquet format. In the high-level design, this is referred to as Option 2. Key components of the solution include:

- EQL Views and Scheduling: Application users can configure Views based on EQL (the system’s query language) in the UI to define the required datasets, and schedule the publishing process with a Recurrent Service.

- Efficient Data Format: Data is transferred in the Parquet format, which is about 5 times smaller than CSV and 10 times smaller than JSON, dramatically reducing transfer times and storage needs. A prototype for streaming data from MSSQL to Parquet was developed in C#.

- Automated Incrementing: The solution supports simple, automatic incremental loading, in which each increment is based on a date column such as Updated, simplifying the ongoing data flow.

- Storage Integration: The system is designed to securely store Parquet files directly in the client’s separate storage: GCP or other cloud storage (e.g., AWS S3, Azure Storage, Data Lake V2) or MSSQL.
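Date-based incrementing of this kind is commonly implemented with a watermark: each run exports only the rows whose Updated value is later than the highest value seen in the previous run, and reads the result in fixed-size batches instead of one large response. The Python sketch below illustrates that general pattern with an in-memory SQLite table standing in for the application database. The `workflows` table, the `export_increment` function, and the batch size are hypothetical, and writing each batch to Parquet in cloud storage is abstracted as a callback.

```python
import sqlite3
from typing import Callable, Optional


def export_increment(
    conn: sqlite3.Connection,
    last_watermark: Optional[str],
    write_batch: Callable[[list[tuple]], None],
    batch_size: int = 1000,
) -> Optional[str]:
    """Export rows changed since `last_watermark` and return the new watermark.

    Timestamps are ISO-8601 strings, so string comparison matches time order.
    """
    query = "SELECT id, status, updated FROM workflows"
    params: tuple = ()
    if last_watermark is not None:  # None means "first run": full export
        query += " WHERE updated > ?"
        params = (last_watermark,)
    query += " ORDER BY updated"

    cursor = conn.execute(query, params)
    new_watermark = last_watermark
    while True:
        batch = cursor.fetchmany(batch_size)  # stream in batches, never the whole result
        if not batch:
            break
        write_batch(batch)            # e.g. write one Parquet file or row group
        new_watermark = batch[-1][2]  # rows are ordered, so the last one is the latest
    return new_watermark


# Demo with a hypothetical table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE workflows (id INTEGER PRIMARY KEY, status TEXT, updated TEXT)")
conn.executemany(
    "INSERT INTO workflows VALUES (?, ?, ?)",
    [
        (1, "Open", "2024-05-01T10:00:00"),
        (2, "Closed", "2024-05-02T09:30:00"),
        (3, "Open", "2024-05-03T08:15:00"),
    ],
)

exported: list[tuple] = []
watermark = export_increment(conn, None, exported.extend, batch_size=2)  # initial full load

# Later, one row changes and another is added; only those two are exported next time.
conn.execute("UPDATE workflows SET status = 'Closed', updated = '2024-05-04T12:00:00' WHERE id = 1")
conn.execute("INSERT INTO workflows VALUES (4, 'Open', '2024-05-04T13:00:00')")
increment: list[tuple] = []
watermark = export_increment(conn, watermark, increment.extend, batch_size=2)
```

A strict `>` comparison can miss rows that share the watermark timestamp but are committed after an export runs, so real implementations often re-read a small overlap window and deduplicate by key.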

Results

The new data lake approach to the client’s data storage and management challenge significantly improved the reliability, efficiency, and user experience of large-scale data publishing.

- Increased reliability.

- No client scripting.

- Future-ready with Delta Lake.

- Optimized performance.

Data extraction and file preparation now run on the application side with built-in monitoring and timeouts, eliminating the connection and SQL timeout issues previously experienced by the client.

Complex Python scripts and manual API calls are no longer required, freeing the client from any scripting work. The solution is also ready to adopt the Delta Lake format for advanced incremental loading, which automatically applies data changes and keeps the full result set up to date without any deduplication effort.
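Delta Lake handles this through a MERGE (upsert) operation: each incoming row is matched to the existing table on a key, matched rows are updated, and unmatched rows are inserted, so the table always holds exactly one current version of each record. The plain-Python sketch below shows only those upsert semantics on in-memory rows; it does not use any Delta Lake library, and the `workflow_id` key and `merge_increment` function are hypothetical.

```python
from typing import Any

Row = dict[str, Any]


def merge_increment(table: dict[Any, Row], increment: list[Row], key: str) -> dict[str, int]:
    """Upsert `increment` into `table` (keyed by `key`): update matches, insert the rest.

    Mirrors MERGE ... WHEN MATCHED THEN UPDATE / WHEN NOT MATCHED THEN INSERT.
    Returns counts of updated and inserted rows.
    """
    stats = {"updated": 0, "inserted": 0}
    for row in increment:
        k = row[key]
        if k in table:
            table[k] = {**table[k], **row}  # matched: overwrite changed columns
            stats["updated"] += 1
        else:
            table[k] = dict(row)  # not matched: insert new record
            stats["inserted"] += 1
    return stats


# Current full result set, keyed by the hypothetical workflow_id column.
table = {
    1: {"workflow_id": 1, "status": "Open", "updated": "2024-05-01"},
    2: {"workflow_id": 2, "status": "Closed", "updated": "2024-05-02"},
}

# An increment containing a changed row (1) and a new row (3).
increment = [
    {"workflow_id": 1, "status": "Closed", "updated": "2024-05-04"},
    {"workflow_id": 3, "status": "Open", "updated": "2024-05-04"},
]

stats = merge_increment(table, increment, key="workflow_id")
print(stats)          # {'updated': 1, 'inserted': 1}
print(sorted(table))  # [1, 2, 3]
```

Because a changed row replaces its previous version instead of being appended next to it, consumers never have to deduplicate the result set themselves.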

Performance was optimized using Parquet: a result set of 6 million workflows produced a file of only 0.6 GB in under 3 minutes.
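As a rough sanity check, those figures imply the averages computed below. The sketch assumes decimal units (1 GB = 10^9 bytes) and uses 3 minutes as the elapsed time; because the run took under 3 minutes, the two rates are lower bounds.

```python
rows = 6_000_000
file_bytes = 0.6e9  # 0.6 GB, assuming decimal gigabytes
seconds = 3 * 60    # upper bound on the run time

bytes_per_row = file_bytes / rows              # average Parquet size of one workflow row
rows_per_second = rows / seconds               # minimum average processing rate
mb_per_second = file_bytes / seconds / 1e6     # minimum average output rate in MB/s

print(f"{bytes_per_row:.0f} bytes/row, {rows_per_second:,.0f} rows/s, {mb_per_second:.1f} MB/s")
# → 100 bytes/row, 33,333 rows/s, 3.3 MB/s
```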
